Why Ethics Matters In AI

Sameer Neupane

Imagine you are going for a job interview in the near future. Instead of presenting your résumé to HR, you feed it into a machine, which notifies you that you have been rejected because you are a woman. Or you apply to a bank for a loan and are denied because you belong to a certain ethnic group. Or you upload a photo to a social media site and a security company uses it for surveillance without your knowledge or consent. Our instincts tell us we don't want to live in such a world. But, believe it or not, scenarios like these, or ones close to them, are already taking place. They have one thing in common: they all involve the use of Artificial Intelligence (AI). Such examples raise the issue of AI ethics.

Ethical pitfalls

As AI has become increasingly important in a variety of industries, including health care, banking, retail, and manufacturing, questions have arisen about AI ethics and why it needs our attention. Even if companies have ethically commendable or ethically neutral intentions when designing or deploying AI systems, there is always a risk of unintentionally falling into ethical pitfalls. No company wants to be held responsible for invading privacy, inadvertently discriminating against women and people of colour, or tricking people into spending more money than they can afford. Yet such problems can arise from not thinking through the consequences, not monitoring AI once it is deployed in the field, and not knowing what to look for when developing or deploying it.

Biased AI, black-box models, and privacy concerns are three of the most discussed issues in AI. When an AI system's results are skewed, they do not generalise and have unacceptably different effects on different subpopulations. This could be due to preferences or exclusions in the training data, but bias can also be introduced by the way data is collected, algorithms are built, objective functions are chosen, and AI outputs are interpreted. In the case of the résumé rejection mentioned above, the AI system may have been trained on past data in which men were more likely to be hired. It may have picked up a pattern that essentially translates to "we don't recruit women here," and so the résumé was rejected.
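One simple way to check whether a system's results are skewed across subpopulations is to compare how often each group receives a favourable outcome. The short Python sketch below is only an illustration: the records are made up, and the function names selection_rates and disparate_impact_ratio are hypothetical, not taken from any particular library.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable outcomes for each group."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favourable[group] += outcome  # outcome is 1 (shortlisted) or 0 (rejected)
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity.

    Assumes at least one group has a non-zero selection rate.
    """
    return min(rates.values()) / max(rates.values())


# Made-up screening outcomes, for illustration only.
records = (
    [("men", 1)] * 6 + [("men", 0)] * 4
    + [("women", 1)] * 2 + [("women", 0)] * 8
)

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
print(rates)
print(round(ratio, 2))
```

In this invented data, men are shortlisted three times as often as women, so the ratio falls far below 1.0. A gap of that size would not prove discrimination by itself, but it is the kind of signal that should prompt a closer look at the training data and the model.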

An AI system analyses large amounts of data, "recognises" one or more patterns in that data, and then compares those patterns with new inputs to produce a "prediction" about them. A black-box model is one whose internal workings are not easily interpretable by humans, so we cannot explain why the system produced the results it did. In the loan scenario above, the training data could have included cases where loans were mostly approved for people of one ethnic group and mostly refused for people of the ethnic group to which the applicant belonged. As a result, the AI system learned a pattern of denying loans to anyone who belongs to that group. Unless we can understand and explain why the AI produced that output, it is very difficult to give the applicant a meaningful explanation of why their loan was declined.

Anyone developing AI who wants it to be extremely accurate will want to collect as much data as possible. To put it another way, data is the fuel of machine learning, and it is typically data about people. Consequently, AI developers and companies have a strong incentive to collect as much data about as many people as possible, which may result in privacy violations. Furthermore, by combining data from different sources, an AI can make accurate inferences about people that those people do not want companies to know. The use of a person's photo for surveillance by an AI system without their knowledge, as in the opening example, is a breach of privacy.

Thus, formal systems for recognising and reducing ethical risks must be in place. Options for mitigating bias include obtaining more training data, selecting better proxies, using more fine-grained data, and choosing a different objective function. To address black-box models, we must focus our efforts on building explainable AI.
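To picture what an explainable model looks like next to a black box, consider a simple linear score in which each input's contribution to the decision can be read off directly. The sketch below is a toy illustration under stated assumptions: the feature names, the weights, and the threshold are all invented, and real credit models are considerably more complex.

```python
# Hypothetical weights and threshold for an illustrative loan score.
# None of these values come from a real lender.
WEIGHTS = {
    "income_thousands": 0.5,
    "years_employed": 2.0,
    "missed_payments": -10.0,
}
THRESHOLD = 40.0


def explain_decision(applicant):
    """Score an applicant and report how much each input contributed."""
    contributions = {
        name: weight * applicant[name] for name, weight in WEIGHTS.items()
    }
    score = sum(contributions.values())
    return {
        "approved": score >= THRESHOLD,
        "score": score,
        "contributions": contributions,
    }


applicant = {"income_thousands": 60, "years_employed": 5, "missed_payments": 2}
result = explain_decision(applicant)

print("approved:", result["approved"], "score:", result["score"])
# List the inputs from most harmful to most helpful for this applicant.
for name, value in sorted(result["contributions"].items(), key=lambda kv: kv[1]):
    print(name, value)
```

Because every contribution is visible, a declined applicant can be told which factor weighed most heavily against them, and an auditor can confirm that no protected attribute, such as ethnicity, appears among the inputs. A black-box model offers no comparable breakdown.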

Regulatory compliance

Explainable AI can be defined as AI that comes with an explanation of how it processes inputs to produce outputs, or of how a certain set of inputs leads to a specific outcome.

Privacy has three main components: regulatory compliance, data integrity and security (cybersecurity), and ethics or data stewardship. Privacy is also frequently confused with anonymity. In the context of AI ethics, privacy is best defined as the degree to which people have access to and control over their data without being subjected to undue pressure. Data privacy should be a top priority in any AI development and deployment.

As AI pushes its own boundaries, it promises game-changing benefits such as increased efficiency, lower prices, and faster research and development. Today, AI has become essential across a vast array of industries, including health care, banking, retail, and manufacturing. AI has rapidly and significantly benefitted human society and the ways we interact with each other, and it will continue to do so, but it has also presented ethical and sociopolitical challenges. If such ethical considerations are ignored, AI systems may cause more social harm than good. Hence, all stakeholders, including developers, managers, employers, employees, businesses, and customers, should seek out trustworthy AI systems.

Recent News

Do not make expressions casting dout on election: EC

14 Apr, 2022

CM Bhatta says may New Year 2079 BS inspire positive thinking

14 Apr, 2022

Three new cases, 44 recoveries in 24 hours

14 Apr, 2022

689 climbers of 84 teams so far acquire permits for climbing various peaks this spring season

14 Apr, 2022

How the rising cost of living crisis is impacting Nepal

14 Apr, 2022

US military confirms an interstellar meteor collided with Earth

14 Apr, 2022

Valneva Covid vaccine approved for use in UK

14 Apr, 2022

Chair Prachanda highlights need of unity among Maoist, Communist forces

14 Apr, 2022

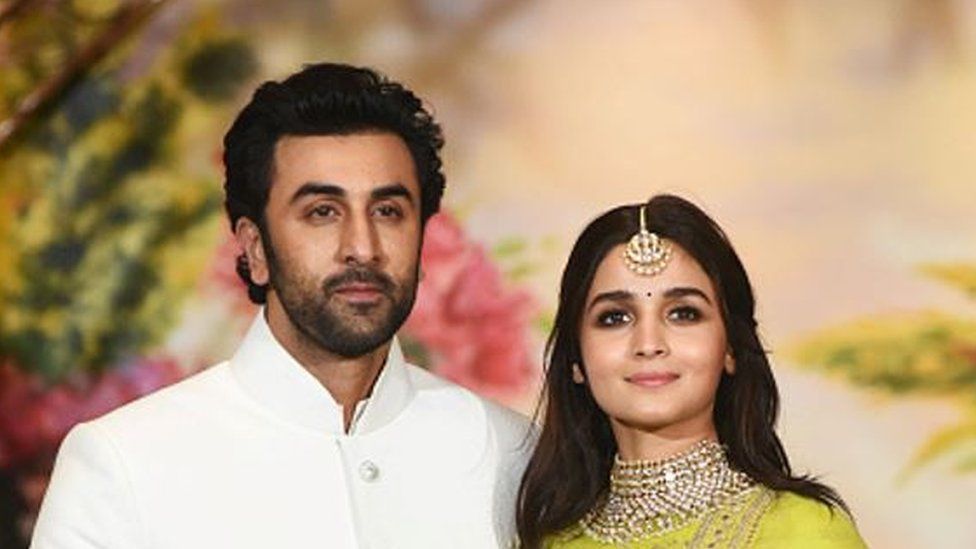

Ranbir Kapoor and Alia Bhatt: Bollywood toasts star couple on wedding

14 Apr, 2022

President Bhandari confers decorations (Photo Feature)

14 Apr, 2022